The Bridged Edition

Some years ago, I had a thought about abridged and unabridged books. I wondered what would happen if we took the abridged version and subtracted it from the unabridged one.

What would be left is a new thing. The “bridged” edition. All the words nobody wants.

I wrote some code and tried this with several books, but abridging involves so much rewriting that the diffs were nonsense.

I had read the British and American versions of Harry Potter and wondered if there might be differences beyond the usual loo becoming toilet, jumper becoming sweater, and motorbike becoming motorcycle.

I wondered if the bridged book code would work here.

tl;dr: check out the US-UK comparisons.

Dean Thomas

I expected mostly small dialect changes. Then my script produced this:

| UK Version | US Version |
|---|---|
| And now there were only [three] people left to be sorted [] Turpin, Lisa” became a Ravenclaw and then it was Ron’s turn. | And now there were only [four] people left to be sorted [Thomas, Dean,” a black boy even taller than Ron, joined Harry at the Gryffindor table] Turpin, Lisa,” became a Ravenclaw and then it was Ron’s turn. |

That’s … strange.

This description of Dean Thomas appears only in the American version. Was it in the original manuscript and later cut in the UK? Did the US editors ask for it to be added? I do not know.

Update: I spent some more time searching and found this explanation:

This was an editorial cut in the British version; my editor thought that chapter was too long and pruned everything that he thought was surplus to requirements. When it came to the casting on the film version of ‘Philosopher’s Stone’, however, I told the director, Chris, that Dean was a black Londoner. In fact, I think Chris was slightly taken aback by the amount of information I had on this peripheral character. I had a lot of background on Dean, though I had never found the right place to use it. His story was included in an early draft of ‘Chamber of Secrets’ but then cut by me, because it felt like an unnecessary digression. Now I don’t think his history will ever make it into the books.

The Code

I wrote a pipeline to compare the books. Ebooks are messy, so I had to clean them up before I could diff them.

Here is what I used:

- Calibre: I used `ebook-convert` to extract raw text from different ebook formats.

- Normalization: I used `ftfy` to fix Unicode problems. I also wrote regex rules for things like honorifics: the UK uses “Mr” while the US uses “Mr.”.

- Comparison and ignoring: The tool walks through the books word by word. It checks a list of known dialect swaps and ignores pairs like “color” and “colour”, so the output is not flooded with spelling noise. I also used Double Metaphone: if two words sound the same, the script ignores them.
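A minimal sketch of the normalize-and-compare step in Python, assuming both books are already plain text. It is not the author’s code: it diffs word lists with `difflib.SequenceMatcher`, skips replacements found in a small hypothetical `DIALECT_SWAPS` table, and, since Double Metaphone is not in Python’s standard library, swaps in plain American Soundex as the sound-alike check. Differences come back as (UK, US) pairs, the same information as the bracketed cells in the comparison tables.

```python
import difflib
import re

# Hypothetical dialect table of (UK word, US word); a real list would be much longer.
DIALECT_SWAPS = {
    ("jumper", "sweater"),
    ("motorbike", "motorcycle"),
    ("loo", "toilet"),
    ("mum", "mom"),
}
PUNCT = ".,;:!?\"'“”‘’…–-"

def normalize(text):
    """Drop the US full stop after honorifics so 'Mr.' and 'Mr' compare equal."""
    return re.sub(r"\b(Mr|Mrs|Ms|Dr)\.", r"\1", text)

def soundex(word):
    """American Soundex, used here as a simple stand-in for Double Metaphone."""
    groups = {"1": "bfpv", "2": "cgjkqsxz", "3": "dt", "4": "l", "5": "mn", "6": "r"}
    codes = {ch: digit for digit, letters in groups.items() for ch in letters}
    word = "".join(ch for ch in word.lower() if ch.isalpha())
    if not word:
        return ""
    out, prev = word[0].upper(), codes.get(word[0], "")
    for ch in word[1:]:
        code = codes.get(ch, "")
        if code and code != prev:
            out += code
        if ch not in "hw":  # h and w do not separate letters with the same code
            prev = code
    return (out + "000")[:4]

def is_noise(uk_words, us_words):
    """True if a replaced span is only dialect swaps or sound-alike spellings."""
    if len(uk_words) != len(us_words):
        return False
    for a, b in zip(uk_words, us_words):
        a, b = a.lower().strip(PUNCT), b.lower().strip(PUNCT)
        if a != b and (a, b) not in DIALECT_SWAPS and soundex(a) != soundex(b):
            return False
    return True

def compare(uk_text, us_text):
    """Return (uk_span, us_span) pairs for every difference that is not noise."""
    uk, us = normalize(uk_text).split(), normalize(us_text).split()
    matcher = difflib.SequenceMatcher(a=uk, b=us, autojunk=False)
    changes = []
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        if tag == "equal" or (tag == "replace" and is_noise(uk[i1:i2], us[j1:j2])):
            continue
        changes.append((" ".join(uk[i1:i2]), " ".join(us[j1:j2])))
    return changes
```

For example, `compare("only three people", "only four people")` returns `[('three', 'four')]`, while “colour”/“color” is skipped because both encode to `C460`. Soundex is much coarser than Double Metaphone (“Robert” and “Rupert” collide), so it can hide real edits; treat its matches as hints.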

What Else Changed?

Here are a few odd changes among many:

Chamber of Secrets: Apparition Explanation

The US version adds a whole world-building segment that’s not in the UK version.

| UK Version | US Version |
|---|---|
| And even underage wizards are allowed to use magic if it’s a real emergency, section nineteen or something of the Restriction of Thingy…” [] Harry’s feeling of panic turned suddenly to excitement. “Can you fly it?” | And even underage wizards are allowed to use magic if it’s a real emergency, section nineteen or something of the Restriction of Thingy – ” [“But your mum and dad…” said Harry, pushing against the barrier again in the vain hope that it would give way. “How will they get home?” “They don’t need the car!” said Ron impatiently. “They know how to Apparate! You know, just vanish and reappear at home! They only bother with Floo powder and the car because we’re all underage and we’re not allowed to Apparate yet…”] Harry’s feeling of panic turned suddenly to excitement. “Can you fly it?” |

Chamber of Secrets: Lucius Malfoy’s Entrance

The US version gives Lucius Malfoy a rougher entrance. It explains why he looks disheveled – Dobby was wiping his shoes. The UK version cuts this context.

| UK Version | US Version |
|---|---|
| [This entire section is missing] | Apparently Mr. Malfoy had set out in a great hurry, for not only were his shoes half-polished, but his usually sleek hair was disheveled. Ignoring the elf bobbing apologetically around his ankles… |

Prisoner of Azkaban: Dementor Release

The US version is more explicit about the Dementor letting Harry go.

| UK Version | US Version |
|---|---|
| Facedown, too weak to move, sick and shaking, Harry opened his eyes. [] The blinding light was illuminating the grass around him. | Facedown, too weak to move, sick and shaking, Harry opened his eyes. [The dementor must have released him.] The blinding light was illuminating the grass around him. |

Deathly Hallows: The Ear Joke

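There’s an ear-joke adjustment. Fred’s nickname for George, who has lost an ear, changes from “Lugless” (“lug” is British slang for ear) to “Your Holeyness.”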

| UK Version | US Version |
|---|---|
| Not so fast, [Lugless] said Fred, and darting past the gaggle of middle-aged witches heading… | Not so fast, [Your Holeyness] said Fred, and darting past the gaggle of middle-aged witches heading… |

The Reports

I generated interactive reports for all seven books. I’ve left out all the Mom/Mum and Color/Colour changes, but otherwise you can use the filters to see the actual additions and removals.

- Book 1: The Sorcerer’s/Philosopher’s Stone

- Book 2: The Chamber of Secrets

- Book 3: The Prisoner of Azkaban

- Book 4: The Goblet of Fire

- Book 5: The Order of the Phoenix

- Book 6: The Half-Blood Prince

- Book 7: The Deathly Hallows

I am working on generalizing the code so I can use it for other comparisons soon. If you find any other odd differences in the reports, let me know.

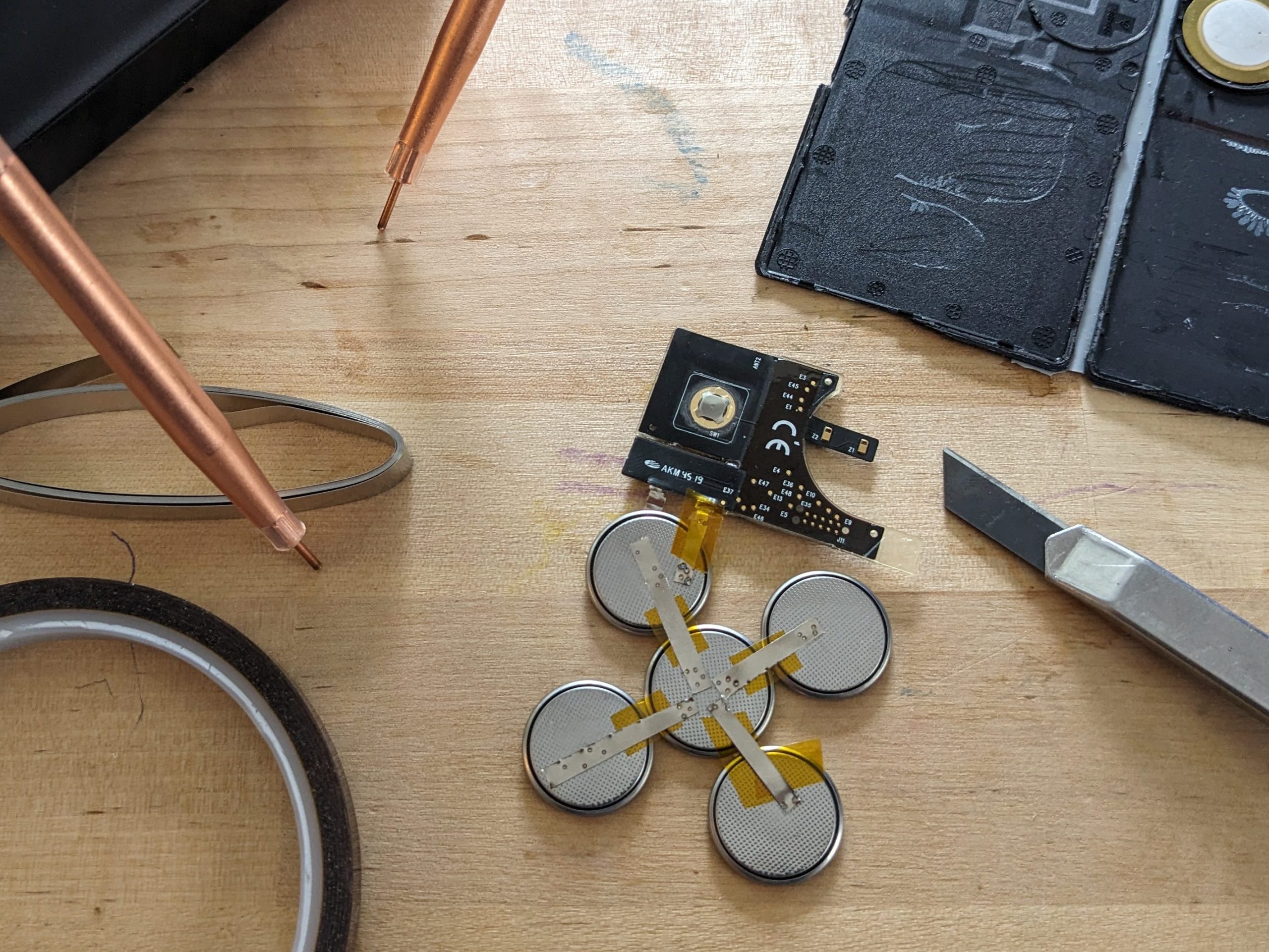

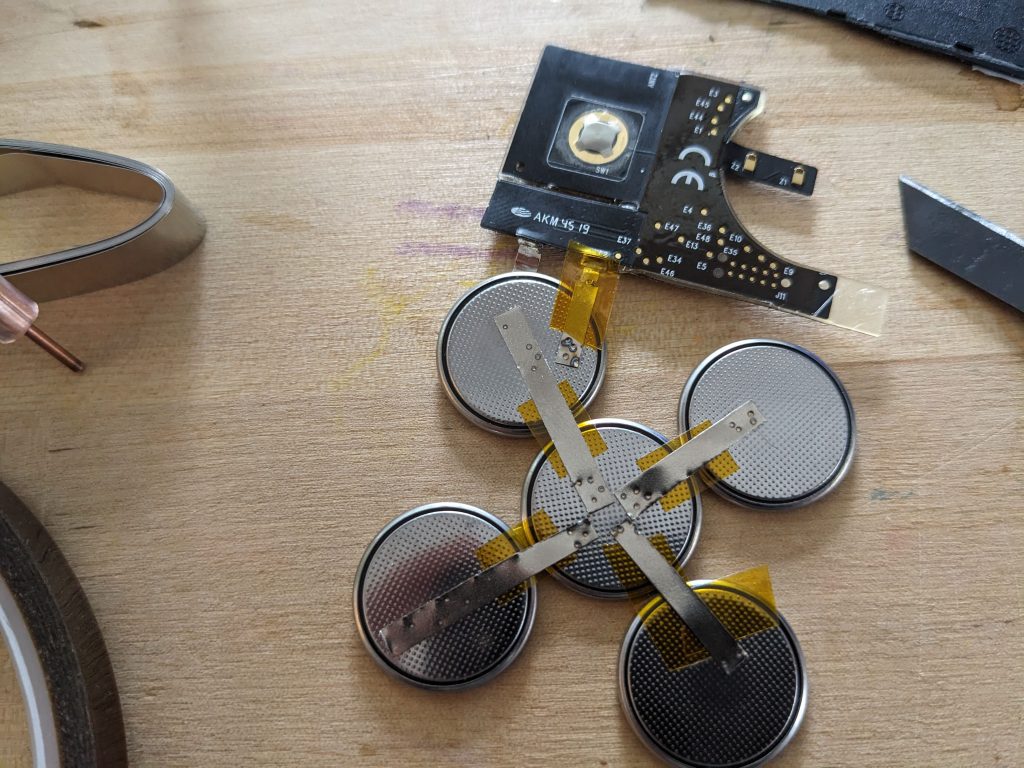

Replacing the 'Non-Replaceable' Battery in the Tile Slim

I replaced the battery in my Tile Slim! It uses a tiny pouch cell (Ultralife CP114951, 3.1 V, 380 mAh).

Be careful: this is going into scary lithium-battery territory. I shaved the plastic from the edges of the Tile Slim until I could peel the plastic shell apart; if I did it again, I think I would sand the edges with 80- or 120-grit sandpaper. The pouch cell inside is protected top and bottom by a thin (0.0015″, 0.0381 mm) sheet of stainless steel that’s glued in.

Once you’re inside, you’ll need to trim off a bit of the rubber around the pouch cell terminals. Trim the terminals as close to the edge of the pouch cell as possible without cutting into the cell itself, and without shorting the terminals with your scissors!

The pouch cell plus the stainless steel plus the glue is about 0.0580″ (1.48 mm) thick. I wanted to stay close to that but figured going over a bit was OK. With a bit of measuring, I figured out I could just fit five CR2016 batteries (0.787″ × 0.0629″, i.e. 20 mm diameter × 1.6 mm thick) in parallel in the case, in an “X” configuration.
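A rough check of the trade-off, assuming a typical datasheet capacity of about 90 mAh per CR2016 (exact figures vary by manufacturer and were not measured here) and assuming the “X” layout keeps all five coins in a single layer. Cells in parallel stay at a single coin cell’s roughly 3 V and add their capacities, and the thickness overage is one coin minus the original stack:

```latex
\begin{aligned}
C_{\text{pack}} &= n\,C_{\text{CR2016}} \approx 5 \times 90\ \text{mAh} = 450\ \text{mAh} \quad (\text{pouch cell: } 380\ \text{mAh}) \\
\Delta t &= 1.6\ \text{mm} - 1.48\ \text{mm} = 0.12\ \text{mm} \approx 0.0047''
\end{aligned}
```

The thickness figure ignores the nickel strips and Kapton tape, so the real overage is a little larger.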

So, I got out the battery welder and cut some thin nickel strips and started welding. I applied some little strips of Kapton tape to make sure nothing shorted.

I had to add some little extensions to the tabs on the Tile board and then welded the batteries onto it. Finally, a bit of packing tape closes the case back up! I could glue it back together, but that seems like a pain to undo in a couple of years when the batteries need replacing again.

Instagram Reconstituer

Instagram is never going to get better. The same goes for all the big social sites—Facebook, Xitter, Reddit, and the like. Sure, there’s some enshittification happening, but it’s mostly about them keeping your eyes glued to a feed full of low-quality ads and posts from strangers. A friend might send you a link, and before you know it, you’ve spent half an hour scrolling through junk.

So, I finally deleted my Instagram account. I didn’t want to be a product they could sell, or to deal with all their pandering politics. And with Instagram getting worse every day, the choice became easier.

But I wasn’t ready to lose my Instagram entirely. It’s a neat slice of my history, so I exported all my data. What I got was a mess: 450 JSON files and 4,500 other files that aren’t easy to browse. On top of that, some image files had the wrong extensions.

I first thought about making one HTML file you could just drop your export into. But thanks to modern browsers and their CORS rules, that wasn’t possible because the JSON files couldn’t be read directly. So, I ended up writing a PHP script that processes all the JSON, video, and image files to build a single HTML file along with a folder of images.
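The core of that build step can be sketched in a few lines of Python (the actual tool is a PHP script that isn’t reproduced here). The sketch assumes a hypothetical, already-parsed list of posts where each post has a `caption`, a Unix `timestamp`, and a relative `image` path; Instagram’s real export nests its fields differently. Every string is escaped so captions can’t break the page.

```python
import html
from datetime import datetime, timezone

def build_page(posts, title="My Instagram archive"):
    """Render posts as one static HTML page, oldest first.

    posts: hypothetical dicts with "caption", "timestamp" (Unix seconds) and
    "image" (path relative to the HTML file). Real export fields differ.
    """
    items = []
    for post in sorted(posts, key=lambda p: p["timestamp"]):
        day = datetime.fromtimestamp(post["timestamp"], tz=timezone.utc).strftime("%Y-%m-%d")
        items.append(
            "<figure>"
            f'<img src="{html.escape(post["image"])}" alt="" loading="lazy">'
            f"<figcaption><time>{day}</time> {html.escape(post['caption'])}</figcaption>"
            "</figure>"
        )
    return (
        "<!DOCTYPE html>\n"
        f'<html><head><meta charset="utf-8"><title>{html.escape(title)}</title></head>\n'
        "<body>\n" + "\n".join(items) + "\n</body></html>\n"
    )
```

Writing the result next to the copied image folder, e.g. `Path("index.html").write_text(build_page(posts), encoding="utf-8")`, gives a page that opens straight from disk: the script has already read the JSON, so the browser never has to.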

The PHP script grew into a jumble of fallbacks and error checks just to handle all the quirks in the data. I’m convinced they made the export as unwieldy as possible on purpose. Many HEIC files are actually JPGs with the wrong extensions, many videos lack thumbnails, and so on. It’s a perfect example of how platforms make it hard to leave—even when they let you “take your data with you.”
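One way to handle files like the fake HEICs is to ignore the extension and sniff the first few bytes. A minimal sketch, assuming only three formats matter: JPEG starts with `FF D8 FF`, PNG with its fixed 8-byte signature, and HEIC/HEIF files with an ISO base media `ftyp` box whose brand (bytes 8 to 11) is something like `heic` or `mif1`. The brand list is not exhaustive, and `sniff_image_type`/`fix_extension` are illustrative names.

```python
from pathlib import Path
from typing import Optional

# Common HEIF/HEIC major brands; not a complete list.
HEIF_BRANDS = {b"heic", b"heix", b"hevc", b"hevx", b"mif1", b"msf1"}

def sniff_image_type(head: bytes) -> Optional[str]:
    """Guess an image format from its leading bytes, ignoring the file name."""
    if head.startswith(b"\xff\xd8\xff"):
        return "jpg"
    if head.startswith(b"\x89PNG\r\n\x1a\n"):
        return "png"
    if len(head) >= 12 and head[4:8] == b"ftyp" and head[8:12] in HEIF_BRANDS:
        return "heic"
    return None

def fix_extension(path: Path) -> Path:
    """Rename a file whose extension disagrees with its contents; return its path."""
    with path.open("rb") as f:
        kind = sniff_image_type(f.read(12))
    current = path.suffix.lower().lstrip(".")
    if kind is None or current == kind or (kind == "jpg" and current == "jpeg"):
        return path
    return path.rename(path.with_suffix("." + kind))
```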

My tool—named Memento Mori (a bit overdramatically)—takes all those files and builds a neat website you can host on your own server. It even shows a date span from your first post to your last post. It feels a bit odd to recreate Instagram’s look to get away from Instagram, but I wanted to make it easier for folks to break free.

Anyway, here’s my export.

Documenting a Block

I’m trying to document an entire block (and maybe eventually an entire street) for a project with Sarah, but capturing a continuous view of a block is tricky. If you take a series of photos while driving (stop, shoot, drive forward, stop, shoot, …), objects at different distances shift position between frames, which makes stitching the photos into one cohesive image difficult. This is the parallax problem.
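Why parallax breaks stitching, as a rough pinhole-camera estimate that ignores lens distortion: if the camera moves sideways by a baseline B between shots, a point at distance Z shifts in the image by about d pixels, where f is the focal length in pixels. Near objects shift more than far ones, so no single alignment fits both. With illustrative distances, a parked car 3 m away moves four times as far across the frame as a house 12 m away:

```latex
d \approx \frac{f\,B}{Z}
\qquad\Longrightarrow\qquad
\frac{d_{\text{car}}}{d_{\text{house}}} = \frac{Z_{\text{house}}}{Z_{\text{car}}} = \frac{12\ \text{m}}{3\ \text{m}} = 4
```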

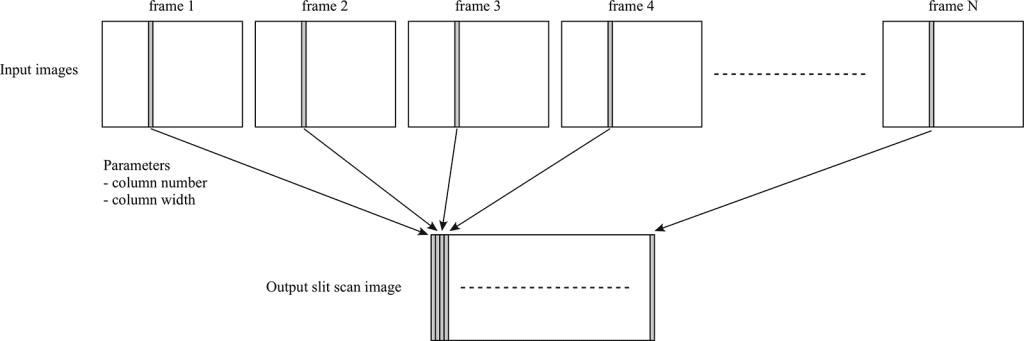

I’m trying a slit-scan technique that captures thin vertical strips from a moving video, then assembles them side-by-side to create a continuous image.
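A minimal sketch of the slit-scan idea, assuming each frame is already loaded as a list of pixel rows (in practice you would read the TIFFs with an imaging library). From every frame it takes a vertical strip `width` pixels wide starting at column `offset` and lays the strips side by side. `slit_scan` and its parameters are illustrative names, not the author’s script.

```python
def slit_scan(frames, width, offset, reverse=False):
    """Build a slit-scan panorama from one vertical strip of every frame.

    frames:  list of frames; each frame is a list of rows, each row a list of pixels.
    width:   strip width in pixels (roughly how far the scene moves per frame).
    offset:  column where each strip starts (e.g. the center of the frame).
    reverse: place later frames to the left, for footage where the scene
             drifts toward higher x between frames.
    """
    if not frames:
        return []
    height = len(frames[0])
    panorama = [[] for _ in range(height)]
    for frame in (reversed(frames) if reverse else frames):
        if len(frame) != height:
            raise ValueError("all frames must have the same height")
        for y in range(height):
            panorama[y].extend(frame[y][offset:offset + width])
    return panorama
```

Running it once per `offset` (left, left-center, center, right-center, right) produces panoramas from different points of view.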

It’s all a bit messy to actually make this happen: you have to slice up the video and then reassemble the slices into a single image. Also, in this case the car was moving more than one pixel per frame, so I had to figure out how far the car moved in each frame of video (about 65 px). That works, but my speed wasn’t perfectly consistent, so the image gets stretched or squashed unevenly.
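Rather than fixing the shift at about 65 px, one option (not necessarily what the author did) is to estimate it for every pair of frames: slide one row of pixels against the next and keep the offset with the smallest mean absolute difference. This sketch works on single grayscale rows and assumes the scene moves toward higher x; `estimate_shift` and `max_shift` are illustrative names. Keep `max_shift` well below the row width, since very small overlaps give unreliable scores, and averaging several rows would be more robust than one.

```python
def estimate_shift(prev_row, next_row, max_shift):
    """Return the pixel shift s that best maps prev_row onto next_row.

    Assumes content moved toward higher x, i.e. next_row[x + s] ~= prev_row[x].
    Each candidate is scored by mean absolute difference over the overlap.
    """
    best_shift, best_score = 0, float("inf")
    for s in range(max_shift + 1):
        overlap = len(prev_row) - s
        if overlap <= 0:
            break
        score = sum(abs(prev_row[x] - next_row[x + s]) for x in range(overlap)) / overlap
        if score < best_score:
            best_shift, best_score = s, score
    return best_shift
```

Feeding each pair’s estimate in as that frame’s strip width would compensate for uneven driving speed.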

What always strikes me about trying to photograph houses is that there are cars, telephone poles, trees, etc. in the way. I had the idea that I could get several different points of view by building a panorama from the left, left-center, center, right-center, and right of each video frame, and then combine those panoramas to see behind the cars and trees. Buuut it turns out the perspective shift is just too much. It does make a cool gif, tho.

Here’s the video that the slit-scan/gif came from.

I have a bit of code to share for this, but it’s all pretty hacky. `stripe.sh` takes a folder of TIFF files and cuts them into little strips. If you need to generate your TIFFs, ffmpeg makes it easy (or you could tweak `stripe.sh` to work directly from an MP4):

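```shell
mkdir -p frames                      # ffmpeg will not create the output folder itself
ffmpeg -i input.mp4 frames/%09d.tif  # writes one zero-padded, numbered TIFF per frame
```

The second bit of code takes those strips and assembles them; it has several options for overlap and testing.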
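For the overlap option, a common approach (assumed here, not taken from the author’s script) is to cut strips slightly wider than the per-frame shift and crossfade the overlapping columns linearly so the seams are less visible. The sketch works on single grayscale rows; `blend_strips` and `overlap` are hypothetical names.

```python
def blend_strips(strips, overlap):
    """Concatenate 1-D strips, linearly crossfading `overlap` pixels at each seam."""
    if not strips:
        return []
    out = list(strips[0])
    for strip in strips[1:]:
        if overlap > min(len(out), len(strip)):
            raise ValueError("overlap is wider than a strip")
        for k in range(overlap):
            w = (k + 1) / (overlap + 1)  # weight of the new strip grows across the seam
            i = len(out) - overlap + k
            out[i] = (1 - w) * out[i] + w * strip[k]
        out.extend(strip[overlap:])
    return out
```

With `overlap=0` this reduces to plain side-by-side concatenation.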

Editing Wikipedia

Is “Big Al” short for “Big Alabama”? (Big Al is the elephant-shaped mascot for the University of Alabama’s various teams.)

So I go and try to determine what “Al” is actually short for. UA’s websites? Not helpful AT ALL.

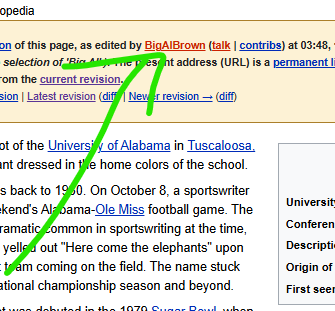

So I go read the Wikipedia page about Big Al, and you know when you read stuff on Wikipedia and you’re like “something feels off”?

“Big Al was named by a student vote. At the time of the vote, there was a popular DJ on campus by the name of Al Brown, who DJ’d many of the largest campus parties, including those hosted by members of the football team. As a result of DJ Al’s popularity, a campaign was started on campus to name the mascot after him, and that campaign succeeded at the polls.”

I’m like, that sounds like PURE NONSENSE.

So I start digging. I want to find out when this piece of information was added to Wikipedia, so I go to the page history and start clicking through each year to see when the info appears.

2006… 2007… 2008… 2009… 2010… OK it appears in 2009. Look at every month and…
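Clicking year by year works, but the same search can be done as a binary search over the revision list, finding the first revision that contains a phrase in about log2(n) checks. A minimal sketch, assuming you already have each revision’s wikitext in chronological order (fetching it is left out) and that the phrase, once added, stays in every later revision searched; `first_revision_with` is an illustrative name.

```python
def first_revision_with(revisions, phrase):
    """Index of the earliest revision containing `phrase`, or None.

    revisions: wikitext strings, oldest first. Assumes that once the phrase
    appears it is present in every later revision (a monotonic history).
    """
    lo, hi = 0, len(revisions)
    while lo < hi:
        mid = (lo + hi) // 2
        if phrase in revisions[mid]:
            hi = mid      # present here, so the first hit is at mid or earlier
        else:
            lo = mid + 1  # not added yet, so look at later revisions
    return lo if lo < len(revisions) else None
```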

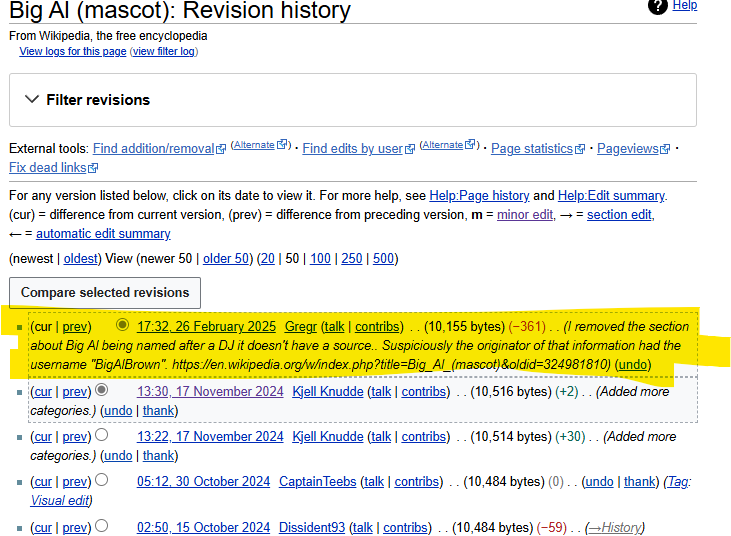

SOME DUDE NAMED BIGALBROWN edited the Wikipedia page to add information about how Big Al was named after “Alfred Lee ‘Big Al’ Brown,” which is TOTAL GARBAGE.

So I’m like, OK I can play this game.

I go look at ol’ Al’s Wikipedia user page, and they’ve only made ONE EDIT EVER, immediately after making their account, which is to add in this nonsense!

So I’m like, alright Imma go read the archives. I get on the UA library website and start trawlin’ through yearbooks from the correct time etc.

Nothing. Nothing. Nothing.

I turn to Google, and there are a lot of articles written AFTER 2009. So all these real news outlets have been fooled by this stupid Wikipedia article! CBS Sports and whoever else, all writing about how Big Al is named after this DJ.

So I BE THE CHANGE and remove that info from Wikipedia. Sorry, MR. AL. Your ruse is up!

So I think Big Al is actually named for “Alamite,” the elephant UA used to have ☠️.

Anyway, fun day in editing Wikipedia.
