Translations of Photographs with "timbrooks/instruct-pix2pix"

January 2nd 2025

I’ve been interested for many years in converting photographic and video imagery into something that looks more like a drawing or painting. About 10 years ago I started experimenting with piping video through Photoshop frame by frame, manipulating it to produce a variety of non-photographic effects.

The issue with the Photoshop manipulations is that there’s no way for Photoshop to understand the content of the images in a meaningful way: the pixels that make up a face are as relevant to the output as the trees in the background. For the drawing experiments I got around that to some degree by using a white backdrop, but the issue remains. (I know, I know, I need better testing footage, but sometimes you just want to start, and you shoot something.)

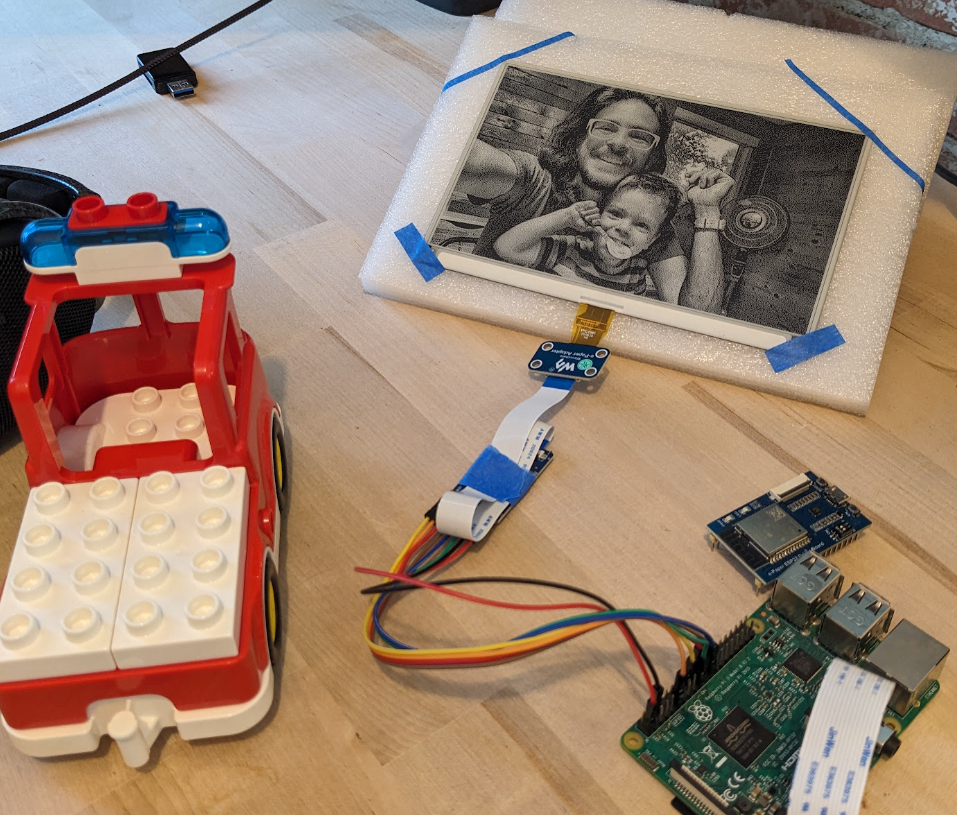

I recently started looking back into image translation while playing around with making an e-paper picture frame. The e-paper display I picked out can only display black or white, so you’re stuck with some kind of dithering to emulate grays. Or, if you could turn the photo into a drawing, you’d be able to get by with just the black and white.
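For reference, the dithering route can be sketched in a few lines. This is a plain-Python Floyd-Steinberg error diffusion, one common dithering method and not necessarily what any particular e-paper library does. It assumes the image has already been loaded as rows of 0-255 gray values, and the function name is made up for illustration.

```python
def floyd_steinberg(gray):
    """Dither rows of 0-255 gray values down to pure black (0) or white (255)."""
    height, width = len(gray), len(gray[0])
    # Work on a float copy so the diffused error can accumulate.
    buf = [[float(v) for v in row] for row in gray]
    out = [[0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            old = buf[y][x]
            new = 255 if old >= 128 else 0
            out[y][x] = new
            err = old - new
            # Push the rounding error onto neighbors not yet visited.
            if x + 1 < width:
                buf[y][x + 1] += err * 7 / 16
            if y + 1 < height:
                if x > 0:
                    buf[y + 1][x - 1] += err * 3 / 16
                buf[y + 1][x] += err * 5 / 16
                if x + 1 < width:
                    buf[y + 1][x + 1] += err * 1 / 16
    return out
```

A flat mid-gray input comes out as a scatter of black and white pixels, which is the "emulated gray" the display ends up showing.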

I played with a few online tools, and they’ve definitely gotten better. There are some that respond to the content of the image in a way that is pretty interesting, though the styles they have available are pretty limited, and of course you don’t really have much control over what they are doing.

I was flipping through Reddit posts about creating drawings from photos, but they were mostly just “use website x, y, or z”. I did find a post that mentioned Automatic1111, but it’s kind of a mess to work with. Reading more about Automatic1111 did bring me to InstructPix2Pix, though, and its GitHub page has info on how to run it in Python!!
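One common way to run the model from Python is through the Hugging Face `diffusers` library, which ships a `StableDiffusionInstructPix2PixPipeline`. The sketch below assumes that route (plus `torch` and `Pillow` installed), which may not match the exact setup used for these experiments. The `stylize` function, the file paths, and the step and guidance values are illustrative.

```python
MODEL_ID = "timbrooks/instruct-pix2pix"


def stylize(photo_path, prompt, out_path, steps=20, image_guidance_scale=1.5):
    """Apply an edit instruction to one photo and save the result."""
    # Heavy imports live inside the function so this file loads without them.
    import torch
    from PIL import Image
    from diffusers import StableDiffusionInstructPix2PixPipeline

    device = "cuda" if torch.cuda.is_available() else "cpu"
    dtype = torch.float16 if device == "cuda" else torch.float32
    pipe = StableDiffusionInstructPix2PixPipeline.from_pretrained(
        MODEL_ID, torch_dtype=dtype
    ).to(device)

    image = Image.open(photo_path).convert("RGB")
    # image_guidance_scale controls how closely the output sticks to the photo.
    result = pipe(
        prompt,
        image=image,
        num_inference_steps=steps,
        image_guidance_scale=image_guidance_scale,
    ).images[0]
    result.save(out_path)
    return out_path
```

Calling something like `stylize("photo.jpg", "Make this a pencil drawing", "drawing.png")` would download the model on first use, so expect the first run to be slow.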

Now I had everything I needed to run some initial experiments, so I wrote a bit of code to take art styles (Expressionism, Cubism, Art Nouveau, Technical Drawing, Scientific Illustration, Pointillism, Hatching, Chiaroscuro, and on and on) and mashed the words up with a list of art adjectives (textured, smooth, rough, dreamy, mysterious, whimsical, flowing, static, rhythmic, and on and on) so I could give InstructPix2Pix a prompt like “Make this photograph into an Art Nouveau work that’s textured and whimsical.” I ran 500 variations and looked them over. Some were interesting, most were not.
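The mash-up itself is simple enough to sketch. The lists below are only the examples named above (the real ones were longer), and `build_prompts` and `article` are made-up names for illustration.

```python
import itertools
import random

STYLES = ["Expressionism", "Cubism", "Art Nouveau", "Technical Drawing",
          "Scientific Illustration", "Pointillism", "Hatching", "Chiaroscuro"]
ADJECTIVES = ["textured", "smooth", "rough", "dreamy", "mysterious",
              "whimsical", "flowing", "static", "rhythmic"]


def article(word):
    """Pick 'a' or 'an' with a simple first-letter check."""
    return "an" if word[0].lower() in "aeiou" else "a"


def build_prompts(styles, adjectives, count, seed=0):
    """Return up to `count` distinct style + two-adjective prompts."""
    rng = random.Random(seed)  # fixed seed so a batch can be reproduced
    combos = [(style, pair)
              for style in styles
              for pair in itertools.combinations(adjectives, 2)]
    chosen = rng.sample(combos, min(count, len(combos)))
    return [f"Make this photograph into {article(s)} {s} work that's {a} and {b}."
            for s, (a, b) in chosen]
```

Each prompt in the returned list can then be handed to the model along with the same source photo.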

I have found some really odd and funny issues. If you prompt InstructPix2Pix with something like “Make this in the style of van Gogh”, a lot of the time it’ll make the people in the picture into van Gogh.

I took some interesting style and adjective variations and started iterating on them, but the process wasn’t easy. So, I decided to write a bit of code to create a sort of prompt tree. The user enters a prompt, and a call to OpenAI transforms it into 5 similar prompts, each generating a picture. The user selects their favorite picture and maybe adds a bit more direction, then 5 more pictures are generated, and so on. This part is still a work in progress, but hopefully there will be some code on GitHub soon.
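The shape of that prompt tree can be sketched without committing to any particular API. In the sketch below the rewriting step is a pluggable `vary` function; `fake_vary` is a stand-in for the OpenAI call, and every name here is hypothetical. This is not the work-in-progress code itself.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass(eq=False)
class PromptNode:
    prompt: str
    parent: Optional["PromptNode"] = field(default=None, repr=False)
    children: List["PromptNode"] = field(default_factory=list)

    def path(self):
        """Prompts from the root down to this node."""
        node, chain = self, []
        while node is not None:
            chain.append(node.prompt)
            node = node.parent
        return chain[::-1]


def expand(node, vary: Callable[[str, int], List[str]], direction="", n=5):
    """Create n child prompts from the chosen node plus optional extra direction."""
    base = f"{node.prompt} {direction}".strip()
    node.children = [PromptNode(p, parent=node) for p in vary(base, n)]
    return node.children


def fake_vary(prompt, n):
    """Placeholder for the language-model call that rewrites a prompt n ways."""
    return [f"{prompt} (variation {i + 1})" for i in range(n)]


root = PromptNode("Make this a pencil drawing.")
first_round = expand(root, fake_vary)
favorite = first_round[2]  # the user picks the picture they like best
second_round = expand(favorite, fake_vary, direction="Use heavier lines.")
```

Keeping the parent link on each node means the full chain of choices behind any picture can be recovered with `path()`, which is handy when a branch turns out well.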

Finally, I’ve tried applying some of these styles to video. Results are interesting but not consistent across frames. I think with more work I might be able to increase consistency: very tight and direct prompting generated by the prompt tree above should help, as should some additional preprocessing of frames to remove noise and increase contrast.
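As one example of that kind of preprocessing, here is a minimal contrast stretch in plain Python. It only covers the contrast half, not noise removal, and it assumes each frame is already available as rows of 0-255 gray values. The function name and percentile defaults are illustrative.

```python
def stretch_contrast(gray, low_pct=2, high_pct=98):
    """Rescale gray values so the chosen percentiles map to 0 and 255."""
    flat = sorted(v for row in gray for v in row)
    lo = flat[(len(flat) - 1) * low_pct // 100]
    hi = flat[(len(flat) - 1) * high_pct // 100]
    if hi == lo:
        # Flat frame: nothing to stretch, return an unchanged copy.
        return [row[:] for row in gray]
    scale = 255 / (hi - lo)
    # Values outside the percentile range are clipped to 0 or 255.
    return [[max(0, min(255, round((v - lo) * scale))) for v in row]
            for row in gray]
```

Running every frame through the same step before prompting gives the model more uniform input, which may or may not be enough to steady the output.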